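Install ELK first

I recommend downloading it from the Elastic Chinese community 👉 [link]

⚠️ Keep the elasticsearch | logstash | kibana versions identical; mismatched versions cause many problems. Don't forget this!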
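
A minimal sketch of that version check, assuming you have already collected each component's version string (for example from `GET /` on Elasticsearch). The function name `versions_match` and the sample values are hypothetical:

```python
def versions_match(versions: dict[str, str]) -> bool:
    """Return True when every component reports the same version string."""
    return len(set(versions.values())) == 1


# Hypothetical sample values; replace them with the versions you installed.
stack = {"elasticsearch": "7.10.0", "logstash": "7.10.0", "kibana": "7.10.0"}
print(versions_match(stack))
```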

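The ELK installation steps are not covered here; see 👉 [TODO]

Download and install canal-adapter

canal GitHub repository 👉 [Alibaba Canal]

canal-client mode

You can test this mode with the example project that canal provides and the examples in the official documentation.

Dependency configuration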

<dependency>

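Create a Maven project

Make sure canal-server has started correctly 👈 then start the service below. Any operation on the database then shows up as log output in the console.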

package com.redtom.canal.deploy;

canal-adapter mode

The adapter configuration file is as follows

server:

My Elasticsearch is version 7.10.0. Directory listing:

application.yml bootstrap.yml es6 es7 hbase kudu logback.xml META-INF rdb

So: 👇

cd es7
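
The directory choice follows the Elasticsearch major version. A small sketch, assuming only the `es6` and `es7` directories from the listing are available; `adapter_dir` is a hypothetical helper, not part of canal:

```python
def adapter_dir(es_version: str) -> str:
    """Map an Elasticsearch version string to the matching adapter config directory."""
    major = int(es_version.split(".")[0])
    if major not in (6, 7):
        raise ValueError(f"no adapter directory for Elasticsearch {es_version}")
    return f"es{major}"


print(adapter_dir("7.10.0"))  # es7
```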

The customer.yml configuration file is as follows:

dataSourceKey: defaultDS

Create the table structure

CREATE TABLE `customer` (

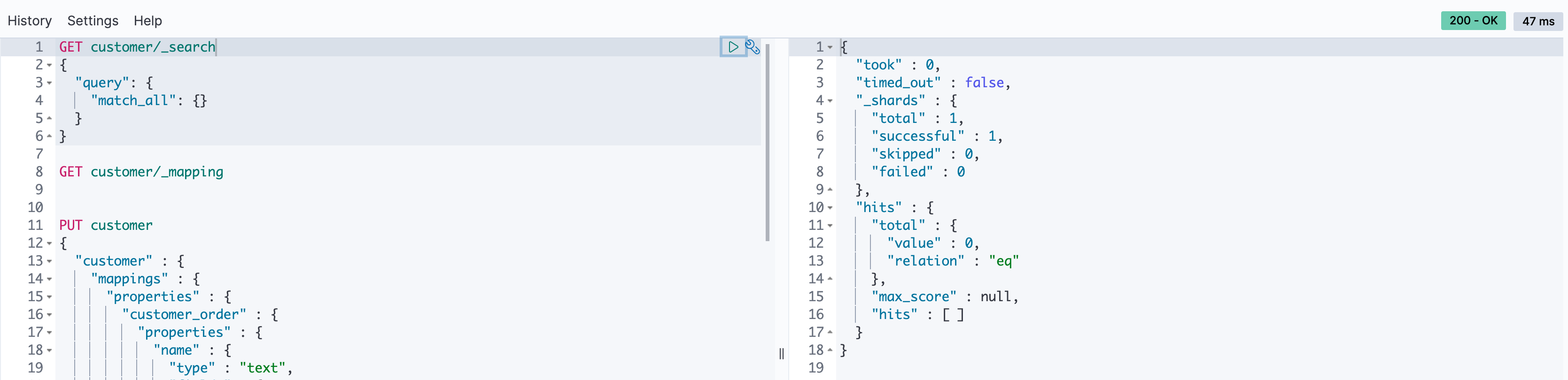

Create the index

PUT customer

Test canal-adapter synchronization

Create a record

2021-07-05 11:50:53.725 [pool-3-thread-1] DEBUG c.a.o.canal.client.adapter.es.core.service.ESSyncService - DML: {"data":[{"id":1,"name":"1","email":"1","order_id":1,"order_serial":"1","order_time":1625457046000,"customer_order":"1","c_time":1625457049000}],"database":"redtom_dev","destination":"example","es":1625457053000,"groupId":"g1","isDdl":false,"old":null,"pkNames":[],"sql":"","table":"customer","ts":1625457053724,"type":"INSERT"}
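
To make the log line easier to read, the payload after `DML: ` can be parsed as JSON. The sketch below uses only the Python standard library and the INSERT line shown above; the variable names are illustrative. The timestamp fields look like epoch milliseconds, so one is converted to UTC:

```python
import json
from datetime import datetime, timezone

# The INSERT log line from above, trimmed to the part starting at "DML: ".
log_line = 'DML: {"data":[{"id":1,"name":"1","email":"1","order_id":1,"order_serial":"1","order_time":1625457046000,"customer_order":"1","c_time":1625457049000}],"database":"redtom_dev","destination":"example","es":1625457053000,"groupId":"g1","isDdl":false,"old":null,"pkNames":[],"sql":"","table":"customer","ts":1625457053724,"type":"INSERT"}'

# Everything after the "DML: " marker is a JSON document.
event = json.loads(log_line.split("DML: ", 1)[1])
row = event["data"][0]

# order_time is treated as epoch milliseconds and converted to UTC.
order_time = datetime.fromtimestamp(row["order_time"] / 1000, tz=timezone.utc)

print(event["type"], event["database"], event["table"])
print(order_time.isoformat())
```

The converted time is 03:50:46 UTC, which is 11:50:46 in UTC+8 and sits a few seconds before the 11:50:53 log timestamp.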

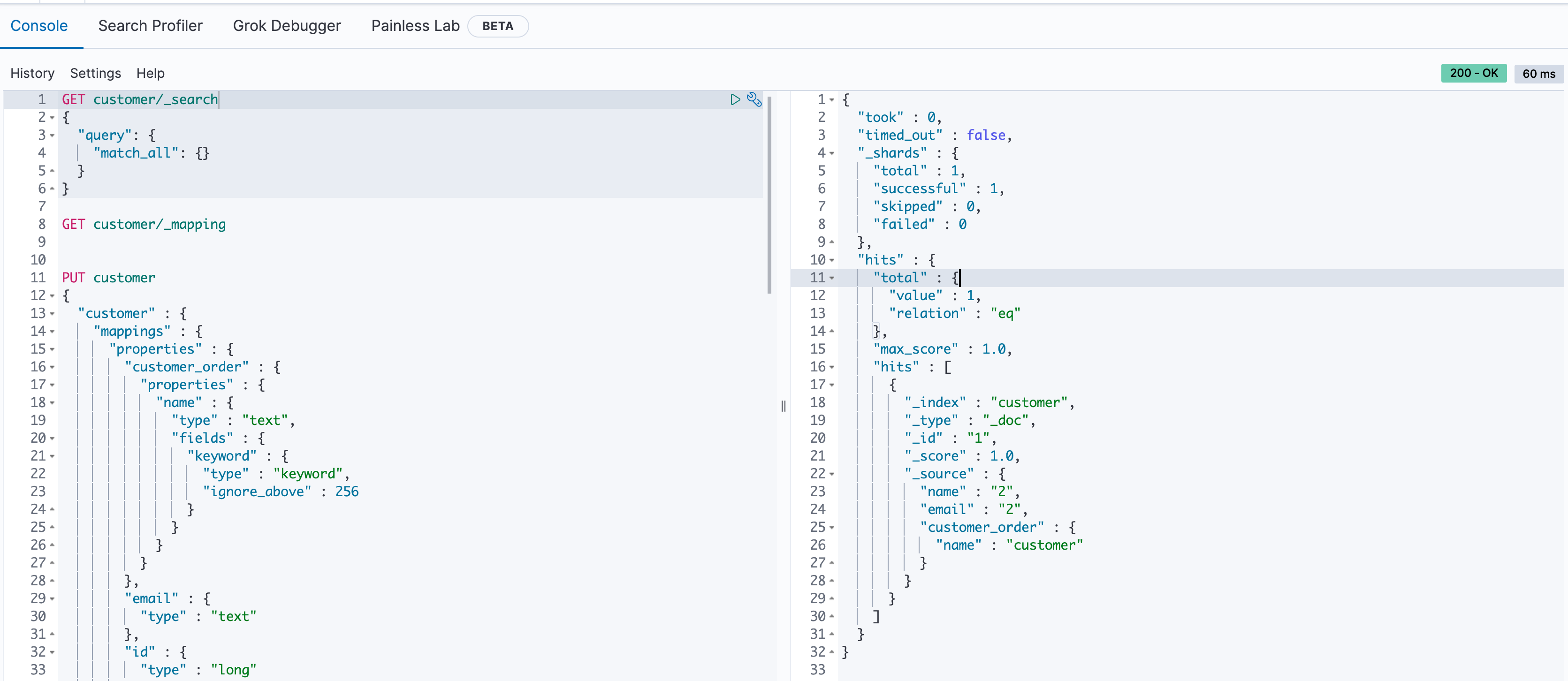

Result in Elasticsearch

{

Modify the data

2021-07-05 11:54:36.402 [pool-3-thread-1] DEBUG c.a.o.canal.client.adapter.es.core.service.ESSyncService - DML: {"data":[{"id":1,"name":"2","email":"2","order_id":2,"order_serial":"2","order_time":1625457046000,"customer_order":"2","c_time":1625457049000}],"database":"redtom_dev","destination":"example","es":1625457275000,"groupId":"g1","isDdl":false,"old":[{"name":"1","email":"1","order_id":1,"order_serial":"1","customer_order":"1"}],"pkNames":[],"sql":"","table":"customer","ts":1625457276401,"type":"UPDATE"}
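
In the UPDATE log above, `old` appears to carry only the columns whose values changed, while `data` holds the full row after the change. A short sketch that pairs the two to list what changed; `changed_columns` is a hypothetical helper and the event dictionary is copied from the log with unrelated keys omitted:

```python
def changed_columns(event: dict) -> list[dict]:
    """For each row, return {column: (old_value, new_value)} for the columns listed in "old"."""
    return [
        {column: (old_value, new_row[column]) for column, old_value in old_row.items()}
        for new_row, old_row in zip(event["data"], event["old"] or [])
    ]


# Fields copied from the UPDATE log line above (other keys omitted for brevity).
update_event = {
    "type": "UPDATE",
    "data": [{"id": 1, "name": "2", "email": "2", "order_id": 2, "order_serial": "2",
              "order_time": 1625457046000, "customer_order": "2", "c_time": 1625457049000}],
    "old": [{"name": "1", "email": "1", "order_id": 1, "order_serial": "1", "customer_order": "1"}],
}

for column, (before, after) in changed_columns(update_event)[0].items():
    print(f"{column}: {before!r} -> {after!r}")
```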

Result in Elasticsearch

Delete a record

2021-07-05 11:56:51.524 [pool-3-thread-1] DEBUG c.a.o.canal.client.adapter.es.core.service.ESSyncService - DML: {"data":[{"id":1,"name":"2","email":"2","order_id":2,"order_serial":"2","order_time":1625457046000,"customer_order":"2","c_time":1625457049000}],"database":"redtom_dev","destination":"example","es":1625457411000,"groupId":"g1","isDdl":false,"old":null,"pkNames":[],"sql":"","table":"customer","ts":1625457411523,"type":"DELETE"}

Result in Elasticsearch
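
To summarize the three tests, the sketch below replays INSERT, UPDATE, and DELETE events against a plain dictionary standing in for the `customer` index. It is a conceptual model only: using the row's `id` as the document ID is an assumption (the actual ID field is set in customer.yml), and `apply_event` is a hypothetical helper, not canal-adapter code.

```python
def apply_event(index: dict, event: dict) -> None:
    """Apply one DML event to an in-memory stand-in for an Elasticsearch index."""
    for row in event["data"]:
        doc_id = row["id"]  # assumption: the primary key doubles as the document ID
        if event["type"] == "INSERT":
            index[doc_id] = dict(row)
        elif event["type"] == "UPDATE":
            index.setdefault(doc_id, {}).update(row)
        elif event["type"] == "DELETE":
            index.pop(doc_id, None)


index = {}

apply_event(index, {"type": "INSERT", "data": [{"id": 1, "name": "1", "email": "1"}]})
name_after_insert = index[1]["name"]

apply_event(index, {"type": "UPDATE", "data": [{"id": 1, "name": "2", "email": "2"}]})
name_after_update = index[1]["name"]

apply_event(index, {"type": "DELETE", "data": [{"id": 1, "name": "2", "email": "2"}]})

print(name_after_insert, name_after_update, index)
```

After the three events the dictionary is empty again, which mirrors the create, modify, and delete sequence tested above.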